In a tech startup industry that loves its shiny new objects, the term “Big Data” is in the unenviable position of sounding increasingly “3 years ago”. While Hadoop was created in 2006, interest in the concept of “Big Data” reached fever pitch sometime between 2011 and 2014. This was the period when, at least in the press and on industry panels, Big Data was the new “black”, “gold” or “oil”. However, at least in my conversations with people in the industry, there’s an increasing sense of having reached some kind of plateau. 2015 was probably the year when the cool kids in the data world (to the extent there is such a thing) moved on to obsessing over AI and its many related concepts and flavors: machine intelligence, deep learning, etc.

Beyond semantics and the inevitable hype cycle, our fourth annual “Big Data Landscape” (scroll down) is a great opportunity to take a step back, reflect on what’s happened over the last year or so and ponder the future of this industry.

In 2016, is Big Data still a “thing”? Let’s dig in.

Enterprise Technology = Hard Work

The funny thing about Big Data is, it wasn’t a very likely candidate for the type of hype it experienced in the first place.

Products and services that receive widespread interest beyond technology circles tend to be those that people can touch and feel, or relate to: mobile apps, social networks, wearables, virtual reality, etc.

But Big Data, fundamentally, is… plumbing. Certainly, Big Data powers many consumer or business user experiences, but at its core, it is enterprise technology (databases, analytics, etc.): stuff that runs in the back that only a few people ever get to see.

And, as anyone who works in that world knows, adoption of new technologies in the enterprise doesn’t exactly happen overnight.

The early years of the Big Data phenomenon were propelled by a very symbiotic relationship among a core set of large Internet companies (in particular Google, Yahoo, Facebook, Twitter and LinkedIn), which were both heavy users and creators of a core set of Big Data technologies. Those companies were suddenly faced with unprecedented volumes of data, had no legacy infrastructure and were able to recruit some of the best engineers around, so they essentially started building the technologies they needed. The ethos of open source was rapidly accelerating and a lot of those new technologies were shared with the broader world. Over time, some of those engineers left the large Internet companies and started their own Big Data startups. Other “digital native” companies, including many of the budding unicorns, started facing similar needs as the large Internet companies, and had no legacy infrastructure either, so they became early adopters of those Big Data technologies. Early successes led to more entrepreneurial activity and more VC funding, and the whole thing was launched.

Fast forward a few years, and we’re now in the thick of the much bigger, but also trickier, opportunity: adoption of Big Data technologies by a broader set of companies, ranging from medium-sized to the very largest multinationals. Unlike the “digital native” companies, those companies do not have the luxury of starting from scratch. They also have a lot more to lose: in the vast majority of those companies, the existing technology infrastructure “does the trick”. It may not have all the bells and whistles, and many within the organization understand that it will need to be modernized sooner rather than later, but they’re not going to rip and replace their mission-critical systems overnight. Any evolution will require processes, budgets, project management, pilots, departmental deployments, full security audits, etc. Large corporations are understandably cautious about having young startups handle critical parts of their infrastructure. And, to the despair of some entrepreneurs, many (most?) still stubbornly refuse to move their data to the cloud, at least the public one.

Another key thing to understand: Big Data success is not about implementing one piece of technology (like Hadoop or anything else), but instead requires putting together an assembly line of technologies, people and processes. You need to capture data, store data, clean data, query data, analyze data, visualize data. Some of this will be done by products, and some of it will be done by humans. Everything needs to be integrated seamlessly. Ultimately, for all of this to work, the entire company, starting from senior management, needs to commit to building a data-driven culture, where Big Data is not “a” thing, but “the” thing.

In other words: lots of hard work.
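To make the “assembly line” idea above a bit more concrete, here is a deliberately tiny sketch in Python of the six stages (capture, store, clean, query, analyze, visualize) chained together. Everything in it is an assumption made for illustration: the sample sales feed, the table and column names, and the use of an in-memory SQLite database as a stand-in for a real data store. A real deployment would involve very different tools at each stage, plus the people and processes around them.

```python
import csv
import io
import sqlite3
from statistics import mean

# Hypothetical raw feed; customers, regions and amounts are invented.
RAW_EVENTS = """customer,region,amount
alice,east,120.0
bob,west,
carol,east,80.0
dave,west,200.0
"""


def capture(raw):
    """Capture: parse the raw CSV feed into a list of dictionaries."""
    return list(csv.DictReader(io.StringIO(raw)))


def store(rows):
    """Store: land the raw rows, untouched, in an in-memory SQLite table."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE raw_sales (customer TEXT, region TEXT, amount TEXT)")
    conn.executemany(
        "INSERT INTO raw_sales VALUES (:customer, :region, :amount)", rows)
    return conn


def clean(conn):
    """Clean: drop rows with a missing amount and convert amounts to numbers."""
    conn.execute(
        "CREATE TABLE sales AS "
        "SELECT customer, region, CAST(amount AS REAL) AS amount "
        "FROM raw_sales WHERE TRIM(amount) != ''")
    return conn


def query(conn):
    """Query: pull the cleaned amounts, grouped by region."""
    grouped = {}
    for region, amount in conn.execute("SELECT region, amount FROM sales"):
        grouped.setdefault(region, []).append(amount)
    return grouped


def analyze(grouped):
    """Analyze: compute the average sale per region."""
    return {region: mean(amounts) for region, amounts in grouped.items()}


def visualize(averages):
    """Visualize: render a crude text bar chart, one '#' per 20 units."""
    return [f"{region:<5} {'#' * int(avg // 20)} {avg:.1f}"
            for region, avg in sorted(averages.items())]


def run_pipeline(raw):
    """Run every stage in order; each stage feeds the next."""
    return visualize(analyze(query(clean(store(capture(raw))))))


if __name__ == "__main__":
    for line in run_pipeline(RAW_EVENTS):
        print(line)
```

Even in this toy version, each stage depends on the one before it, and a single dirty record (the row with no amount) has to be handled explicitly somewhere along the line. Multiply that by dozens of data sources, products and teams, and the integration effort becomes clear.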

The Deployment Phase

The above explains why, a few years after many of the high profile startups were launched and the headline-grabbing VC investments made, we are just hitting the deployment and early maturation phase of Big Data.

The more forward-thinking large companies (call them the “early adopters” in a traditional technology adoption cycle) started early experimentation with Big Data technologies sometime in 2011-2013, launching Hadoop pilots (often because it was the chic thing to do) or trying out point solutions. They hired all sorts of people whose job titles didn’t exist previously (such as “data scientist” or “chief data officer”). They went through various types of efforts, including dumping all their data in one central repository or “data lake”, sometimes hoping that magic would ensue (it generally didn’t). They gradually built internal competencies, experimented with different vendors, went from pilots to departmental deployments in production and are now debating (or, more rarely, implementing) enterprise-wide roll outs. In many cases, they are at an important inflection point where, after several years building Big Data infrastructure, they don’t have (yet) much to show for it, at least from the perspective of the business user in their companies. But a lot of the thankless work has been done, and the disproportionately impactful phase where applications are deployed on top of the core architecture is now starting.

The next set of large companies (call them the “early majority” in the traditional technology adoption cycle) has been staying on the sidelines for the most part, and is still looking at this whole Big Data thing with some degree of puzzlement. Up until recently, they were hoping that a large vendor (e.g., an IBM) would offer a one-stop-shop solution, but it’s starting to look like that may not happen anytime soon. They look at something like our Big Data Landscape with horror, and wonder whether they seriously need to work with all those startups that often sound the same, and cobble those solutions together. They’re trying to figure out whether they should work sequentially and progressively, building the infrastructure first, then the analytics then the application layer, or do everything at the same time, or wait until something much easier shows up on the horizon.

The Ecosystem is Maturing

Meanwhile, on the startup/vendor side, the first-wave Big Data companies (those that were founded in, say, 2009 to 2013) have now raised multiple VC financing rounds, scaled their organizations, learned from successes and failures in early deployments, and now offer more mature, battle-tested products. A handful are now public companies (including Hortonworks and New Relic, which both went public in December 2014), while others (Cloudera, MongoDB, etc.) have raised hundreds of millions of dollars.

VC investment in the space remains vibrant, and the first few weeks of 2016 saw a flurry of announcements of big funding rounds for late stage Big Data startups: Datadog ($94M), BloomReach ($56M), Qubole ($30M), PlaceIQ ($25M), etc. Big Data startups received $6.64B in venture capital investment in 2015, 11% of total tech VC.

M&A activity has remained moderate (we noted 35 acquisitions since our last landscape, see notes below).

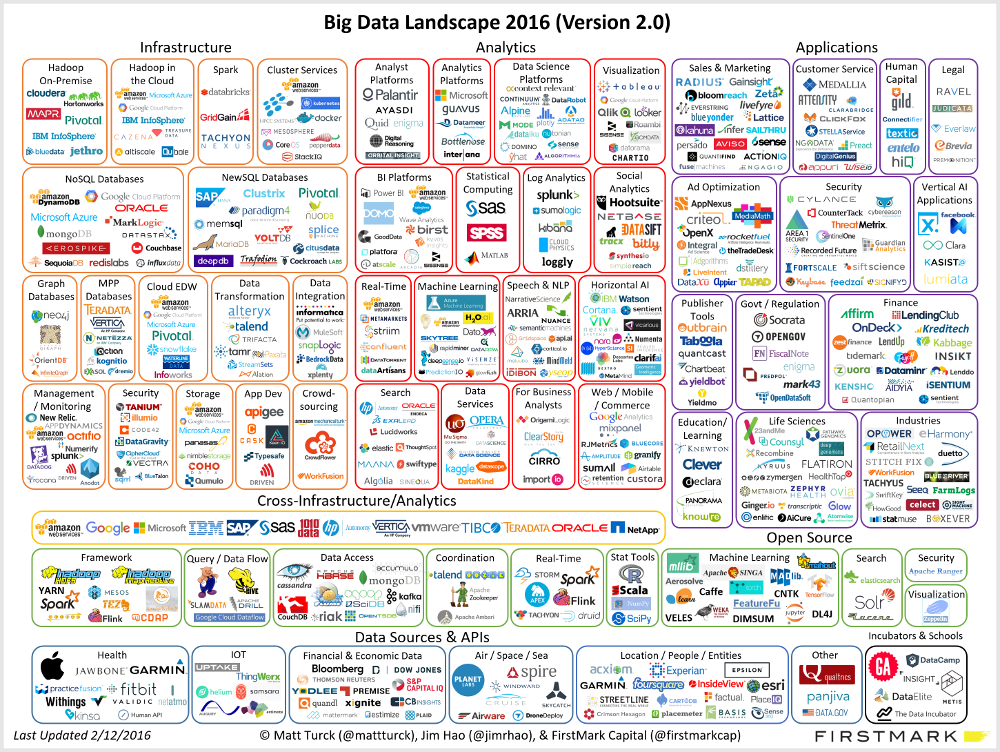

With continued influx of entrepreneurial activity and money in the space, reasonably few exits, and increasingly active tech giants (Amazon, Google and IBM in particular), the number of companies in the space keeps increasing, and here’s what the Big Data Landscape looks like in 2016:

To see the landscape at full size, click here. To view a full list of companies, click here. [Note: This is version 2.0 of the landscape and list, both revised as of February 12, 2016]

Obviously, that’s a lot of companies, and many others were not included in the chart, deliberately or not (scroll to the bottom of the post for a few notes on methodology).

In terms of the fundamental trend, the action (meaning innovation, launch of new products and companies) has been gradually moving left to right, from the infrastructure layer (essentially the world of developers/engineers) to the analytics layer (the world of data scientists and analysts) to the application layer (the world of business users and consumers) where “Big Data native applications” have been emerging rapidly – following more or less the pattern we expected.

Big Data infrastructure: Still Plenty of Innovation

It’s now been a decade since Google’s papers on MapReduce and the Google File System led Doug Cutting and Mike Cafarella to create Hadoop, so the infrastructure layer of Big Data has had the most time to mature and some key problems there have now been solved.

However, the infrastructure space continues to thrive with innovation, in large part through considerable open source activity.

2015 was without a doubt the year of Apache Spark, an open source framework leveraging in-memory processing, which was starting to get a lot of buzz when we published the previous version of our landscape. Since then, Spark has been embraced by a variety of players, from IBM to Cloudera, giving it considerable credibility. Spark is meaningful because it effectively addresses some of the key issues that were slowing down the adoption of Hadoop: it is much faster (benchmarks have shown Spark running 10 to 100 times faster than Hadoop’s MapReduce on some workloads), easier to program, and lends itself well to machine learning. (For more on Spark, see our fireside chat at our Data Driven NYC monthly event with Ion Stoica, one of the key Spark pioneers and CEO of Spark-in-the-cloud company Databricks, here).

Other exciting frameworks continue to emerge and gain momentum, such as Flink, Ignite, Samza, Kudu, etc. Some thought leaders believe the emergence of Mesos (a framework to “program against your datacenter like it’s a single pool of resources”) dispenses with the need for Hadoop altogether (watch a great talk on the topic by Stefan Groschupf, CEO of Datameer, here and learn more about Mesos by watching Tobi Knaup of Mesosphere here).

Even in the world of databases, which seemed to have seen more emerging players than the market could possibly sustain, plenty of exciting things are happening, from the maturation of graph databases (watch Emil Eifrem, CEO of Neo4j, here) and the launch of specialized databases (watch Paul Dix, founder of time series database InfluxDB, here) to the emergence of CockroachDB, a database inspired by Google Spanner, billed as offering the best of both the SQL and NoSQL worlds (watch Spencer Kimball, CEO of Cockroach Labs, here). Data warehouses are evolving as well (watch Bob Muglia, CEO of cloud data warehouse Snowflake, here).

Big Data Analytics: Now with AI

The big trend over the last few months in Big Data analytics has been the increasing focus on artificial intelligence (in its various forms and flavors) to help analyze massive amounts of data and derive predictive insights.

The recent resurrection of AI is very much a child of Big Data. The algorithms behind deep learning (the area of AI that gets the most attention these days) were for the most part created decades ago, but it wasn’t until they could be applied to massive amounts of data cheaply and quickly enough that they lived up to their full potential (watch Yann LeCun, pioneer of deep learning and head of AI at Facebook, here). The relationship between AI and Big Data is so close that some industry experts now think that AI has regrettably “fallen in love with Big Data” (watch Gary Marcus, CEO of Geometric Intelligence, here).

In turn, AI is now helping Big Data deliver on its promise. The increasing focus on AI/machine learning in analytics corresponds to the logical next step of the evolution of Big Data: now that I have all this data, what insights am I going to extract from it? Of course, that’s where data scientists come in – from the beginning their role has been to implement machine learning and otherwise come up with models to make sense of the data. But increasingly, machine intelligence is assisting data scientists – just by crunching the data, emerging products can extract mathematical formulas (watch Stephen Purpura, founder of Context Relevant here) or automatically build and recommend the data science model that’s most likely to yield the best results (watch Jeremy Achin, CEO of DataRobot here). A crop of new AI companies provide products that automate the identification of complex entities such as images (watch Richard Socher, CEO of MetaMind, here; Matthew Zeiler, CEO of Clarifai, here; and David Luan, CEO of Dextro here) or provide powerful predictive analytics (e.g., our portfolio company HyperScience, currently in stealth).

As products based on unsupervised learning spread and improve, it will be interesting to see how their relationship with data scientists evolves – friend or foe? AI is certainly not going to replace data scientists any time soon, but expect to see increasing automation of the simpler tasks that data scientists perform routinely, and big productivity gains as a result.

By no means is AI/machine learning the only trend worth noting in Big Data analytics. The general maturation of Big Data BI platforms and their increasingly strong real-time capabilities is an exciting trend (watch Amir Orad, CEO of SiSense, here; and Shant Hovsepian, CTO of Arcadia Data, here).

Big Data Applications: A Real Acceleration

As some of the core infrastructure challenges have been solved, the application layer of Big Data is rapidly building up.

Within the enterprise, a variety of tools has appeared to help business users across many core functions. For example, Big Data applications in sales and marketing help with figuring out which customers are likely to buy, renew or churn, by crunching large amounts of internal and external data, increasingly in real-time. Customer service applications help personalize service; HR applications help figure out how to attract and retain the best employees; etc.

Specialized Big Data applications have been popping up in pretty much any vertical, from healthcare (notably in genomics and drug research) to finance to fashion to law enforcement (watch Scott Crouch, CEO of Mark43 here).

Two trends are worth highlighting.

First, many of those applications are “Big Data Natives” in that they are themselves built on the latest Big Data technologies, and represent an interesting way for customers to leverage Big Data without having to deploy underlying Big Data technologies, since those already come “in a box”, at least for that specific function. For example, our portfolio company ActionIQ is built on Spark (or a variation thereof), so its customers can leverage the power of Spark in their marketing department without having to actually deploy Spark themselves – no “assembly line” in this case.

Second, AI has made a powerful appearance at the application level as well. For example, in the cat and mouse game that is security, AI is being leveraged extensively to get a leg up on hackers and identify and combat cyberattacks in real time. “Artificially intelligent” hedge funds are starting to appear. A whole AI-driven digital assistant industry has appeared over the last year, automating tasks from scheduling meetings (watch Dennis Mortensen, CEO of x.ai here) to shopping to bringing you just about everything. The degree to which those solutions rely on AI varies greatly, ranging from near 100% automation to “human in the loop” situations where human capabilities are augmented by AI – nonetheless, the trend is clear.

Conclusion

In many ways, we’re still in the early innings of the Big Data phenomenon. While it’s taken a few years, building the infrastructure to store and process massive amounts of data was just the first phase. AI/machine learning is now precipitating a trend towards the emergence of the application layer of Big Data. The combination of Big Data and AI will drive incredible innovation across pretty much every industry. From that perspective, the Big Data opportunity is probably even bigger than people thought.

As Big Data continues to mature, however, the term itself will probably disappear, or become so dated that nobody will use it anymore. It is the ironic fate of successful enabling technologies that they become widespread, then ubiquitous, and eventually invisible.

____________________

NOTES:

1) First and foremost, a big thank you to our FirstMark associate Jim Hao, who did a lot of the heavy lifting on this project and was immensely helpful.

2) As it became very clear very quickly that we couldn’t possibly fit all companies we wanted on the chart, we ended up giving priority to startups that have raised one or several rounds of venture capital financing – certainly an imperfect criterion (but, hey, we’re VCs…), and we’ve occasionally made the editorial decision to include earlier stage startups when we thought they were particularly interesting.

3) As always, it is inevitable that we inadvertently missed some great companies in the process of putting this chart together. Did we miss yours? Feel free to add thoughts and suggestions in the comments.

4) The chart is in PNG format, which should preserve overall quality when zooming, etc.

5) Disclaimer: I’m an investor through FirstMark in a number of companies mentioned on this Big Data Landscape, specifically: ActionIQ, Cockroach Labs, Helium, HyperScience, Kinsa, Sense360 and x.ai. Other FirstMark portfolio companies mentioned on this chart include Bluecore, Engagio, HowGood, Payoff. I’m a small personal shareholder in Datadog and LendingClub (pre-IPO).

6) Notable acquisitions (of all sizes) include Revolution Analytics (acquired by Microsoft in January 2015), Pentaho (acquired by Hitachi in February 2015), Mortar (acquired by Datadog in February 2015), Acunu and FoundationDB (both acquired by Apple in March 2015), AlchemyAPI (acquired by IBM in March 2015), Amiato (acquired by Amazon in April 2015), Next Big Sound (acquired by Pandora in May 2015), 1010Data (acquired by Advance/Newhouse in August 2015), Informatica (acquired/taken private by a company controlled by the Permira funds and Canada Pension Plan Investment Board (CPPIB) in August 2015), Boundary (acquired by BMC in August 2015), Bime Analytics (acquired by Zendesk in October 2015), Cleversafe (acquired by IBM in October 2015), ParStream (acquired by Cisco in November 2015), Lex Machina (acquired by LexisNexis in November 2015) and DataHero (acquired by Cloudability in January 2016).

Great article, but I would like to point out that Kx Systems’ kdb+ database, with its built-in programming language q, is a serious player in the Big Data space, but doesn’t fit into the categories used in this article. It has been widely adopted by financial services companies for over 20 years for high-performance analytics of streaming, real time and historical market data. It isn’t open source, nor is it technically NewSQL or NoSQL, it is SQL-like, so it seems to have slipped below your radar.

When you look at the relevance of players in the Big Data space another method could be their market share in industries, like financial services, where for decades leading firms have been investing heavily in kdb+, a proprietary software that gives them a competitive edge.

Great article! Not only does this post capture the big data market pretty well, but it also talks about its future.

Search combined with analytics can have a huge role in the coming years (bigger than the one highlighted in the landscape, in my opinion), especially because those types of technologies have been built to solve hard problems like text analysis, advanced NLP, connectivity to multiple applications, scalability and machine learning: the foundation of AI and cognitive computing capabilities.

Kudos for quite an encyclopedic roundup! In essence, we’re at the “blocking & tackling” stage of Big Data. Beyond the hype and the flashy pilot, it’s time for the more invisible task of productionalizing. You’ve painted a great picture of the emerging ecosystem, especially the emergence of AI tools and companies. My take: in the short to medium term, AI’s role will be assisting humans, not doing their thinking for them, which will be quite valuable in itself. And for the early majority, 2016 will be the year that they tackle how to REALLY build the Data Lake. Meaning, what governance, cataloging, lineage, security & lifecycle management will be involved. I’m just diving into that research now, but my take is that tools, technologies & best practices are works in progress.

So I’m curious who you see as being successful helping enterprises build & manage Data Lakes? Any leaders in the pack right now?

Excellent post and thanks so much for putting this together. I’d add sovrn to your Publisher Tools box, as we apply significant engineering and data science resources to the petabyte+ of data we collect per month to help our publisher base of 50K+ websites get smarter about their audiences and produce more compelling content. Thanks again!

thanks Matt for the article.

As per your comment, would like to add another contender in the machine learning space http://www.mldb.ai (disclosure: I work for datacratic)

thanks

alex

Excellent article and we couldn’t agree more at Coho Data. Many vendors will tell you to separate your data, your Enterprise Data from your Big Data Analytics. This will require two storage systems, the typical Enterprise storage system and the Data Lake. What Coho Data is doing is evolutionary, and we can do it because we represent a software defined data center approach utilizing SDN OpenFlow enabled switches for all data placement, routing, and load balancing. Since we have rack-converged Intel multicore compute and Intel NVMe PCIe P3700 as our persistent flash layer, you don’t need to have separate storage domains. Your primary virtualized databases, exchange, and other key traditional applications can run normally during peak hours, and during off-peak hours, we can run your Big Data Analytics on the same storage. The financial benefits are enormous! 1. You don’t need a Data Lake set up with separate compute and storage. 2. You don’t need to traverse the data over the network from compute to storage and back. 3. With Coho Data, the HDFS runs inside vmNFS in a single easy to manage and dynamically growing datastore with a single IP address and target. HDFS runs right on the storage which includes compute! As we’ve seen in many DATA DRIVEN presentations, “Moving Big Data is Expensive” and “Push All of The Computation Down Close To The Data.” This is exactly what Coho Data is doing. If you are allergic to doing business with a storage startup, HP has invested in us and we’re working out the final details on their go to market strategy with Coho Data. Intel also invested in us and brought us into UBS Bank to help them with their Big Data Analytics challenges:

UBS Big Data Analytics Case Study: http://www.cohodata.com/pdfs/ubs-coho-success-story.pdf

UBS Hadoop Reference Architecture Whitepaper:

http://www.cohodata.com/pdfs/coho-solutions-reference-architecture.pdf

Finally, our storage architecture is positioned to be the bridge between supporting the old guard applications like Oracle, SQL, Exchange, etc. and the New Emerging Applications like Docker, Spark, Hadoop, Tableau, Splunk, Kubernetes, etc.

For more information please contact me.

Note: As mentioned, our architecture is SDDC and based on the NVMe PCIe bus, so we will be able to leverage today’s system to integrate NVDIMM and 3D Xpoint from Intel/Micron when it comes to market.

Great article, Matt. I’d humbly suggest that http://www.redsqirl.com, the new analytical framework for big data analytics is one to watch. Red Sqirl will be unique in some important ways, when it launches later this year. It’s currently in open Beta.

Hope to see you including the Sqirl in your 2017 version!

Simon

AWS — one of the easiest options for dipping a company’s toe in the water with big data — was not mentioned, yet their services are inexpensive (for test apps), they have great support and lots of AWS Meetups are cropping up all over the country to help people get started. (I’m not affiliated with them, just impressed with their support for customers).

Great Pic. A few corrections: Looker needs to be moved to data visualization, Talend should be moved to data transformations, Tamr should be moved to machine learning, Eliza should be added to Speech, and HP Vertica really needs to be added to a couple of places (definitely new SQL, probably Cross-Infrastructure / Analytics).

Nice job aggregating many complex, independent stories. We should continue to thank Google for releasing so much research to the community, spawning this amazing disruption in data and analytics. For thirty years, RDBMS first enabled, then inhibited a data driven world. It’s exciting to see that the past 10 years have spawned so many solutions to this next generation of data expansion challenges. I am personally very excited about the next steps in the distributed, yet synchronized data world, and what it will bring. We are close to: “Your data”, “My data” and the virtual access to a map of “Our shared data”. Data physically located in thousands / millions of hubs of private ownership is a reality 50 years in the making, yet new virtual fabrics with acceptable governance of data sharing and CRUD will allow safe, communal use soon. Analytics over this superset of virtual, communal data will yield discoveries that we cannot even imagine today. We learn a lot by looking at our private data, and we have learned how to enlarge our data collection for better analytics. But… we will learn infinitely more when we can look over the entire world’s contributed data and correlate it to the context of our private data!

For those in Big Data Analytics looking for GPU as a Service offerings that can help them achieve the performance they are looking for, may I suggest Cirrascale. With NYU, Nervana and Samsung all using their equipment, it seems like a great fit for those interested in GPU cloud services. http://www.gpuasaservice.com.

And don’t forget Actian too !

Actian’s in-Hadoop MPP database named Vector-H is winning many competitive POCs and new customers within the MPP Database, NewSQL and Cross Infrastructure categories listed here, as is our non-Hadoop MPP database named Matrix (formerly ParAccel).

I suggest including Converseon in the social analytics space. Converseon is a longtime leader in the space… Forrester leader in enterprise social listening 2010, strong performer in 2012 and 2014. Dataweek Top Innovator in Social Data Mining. Well recognized as industry pioneer. Privately funded. Its subsidiary Revealed Context is a leader in text analytics utilizing machine/deep learning for highest industry precision, relevancy, recall.

Matt: Thanks again for shining a light on the complex Big Data Landscape.

Actian acquired ParAccel (formerly in your infographic) in 2013, and now ships a significantly enhanced version of that MPP database as Actian “Matrix”. Among the many Big Data users of Matrix we include Amazon, since “Matrix” is the underlying OEM engine for “Redshift”.

Actian also makes “VectorH”, an enterprise-class, extreme high-performance Analytic SQL Database on Hadoop, which could also appear in your “Hadoop On-Premise” section like the other databases.

Glad to see Rocana included under “management and monitoring.” It should also be listed under “log analytics.” TY.

Pls add Simularity’s Predictive Analytics AI to Horizontal AI. We handle not just big data but huge data for IoT.

Yes, a very interesting piece and the graphic in particular is worth studying. No doubt there will be some companies that have been missed off entirely and there are obviously lots included already – and I see in the Notes section at the end that priority has been given to startups. I would argue that many of the bigger players – likes of SAP, IBM & SAS – should appear in more of the boxes, but this is difficult to do when you’re looking to include startups and communicate the number of different players in the big data marketplace.

Excellent work. For your services category, take a look at Experfy, a Harvard-incubated platform with vetted data scientists providing on-demand consulting. Techcrunch calls us a “McKinsey in the cloud for Big Data Consulting” and Datanami calls us the “Uber of Big Data Projects.” See https://www.experfy.com/projects/category for a sample of projects currently underway on the platform.

Hey Matt – Great article, very thorough analysis. Have you seen what we do at Bit Stew? http://www.bitstew.com/products/ (Full disclosure: I work at Bit Stew) I’d say we’re definitely a contender for cross infrastructure analytics because of our work in the Industrial IoT.

In practice, big data has never “been a thing”, it’s always been a journey. The sooner we acknowledge that and start to talk about that journey, the sooner we can help companies to get value from their data.

While Hadoop was created in 2006, interest in the concept of “Big Data” reached fever pitch sometime between 2011 and 2014. Where is this information?

Great #BigData Analytics

Good idea, and a useful post; thanks for sharing. Have a look at WishWorks as well: its blog has useful information on IT services and more, which helps us a lot.

Hi Matt,

Thanks for the survey.

In the survey WSO2 is not mentioned. Please see http://wso2.com/analytics for more information. We offer solutions in batch analytics, realtime analytics (WSO2 CEP), and machine learning (WSO2 ML). Our products are used by several Fortune 500 companies.

I could provide more information as needed.

–Srinath


Great article… and it makes me ponder even more something I have been noticing lately. The use of big data and AI… it is generally just being used across big companies with lots of resources; smaller companies haven’t been able to keep up, at least outside of the high tech world. Anyone else seeing both a big concern (and big opportunity) in the inability of the average sized company to keep pace with the ones on the cutting edge? I know lots of these companies are out there selling their software, but in many cases to companies who don’t have the people or even the culture to do some of the most basic things. I’m curious to hear what others think about this.

Matt…outstanding article and landscape chart. It would be interesting to have a similar chart for service providers in both horizontal and vertical markets. This is one startup that could go in the healthcare and pharma analytics vertical space: http://rxdatascience.com/