Should we be worried about the prospect of AI superintelligence taking over the world?

“In the real world, current-day robots struggle to turn doorknobs, and Teslas driven in ‘Autopilot’ mode keep rear-ending parked emergency vehicles […]. It’s as if people in the fourteenth century were worrying about traffic accidents, where good hygiene might have been a whole lot more helpful”.

This is one of my favorite quotes from “Rebooting AI: Building Artificial Intelligence We Can Trust,” a new book by Gary Marcus – scientist, NYU professor, New York Times bestselling author, entrepreneur – and his co-author Ernest Davis, Professor of Computer Science at the Courant Institute, NYU.

Gary did us a big honor recently: he chose to speak at Data Driven NYC on the evening of the publication of the book. He also signed a few copies. Our first book launch party!

Particularly if you’re trying to make sense of the still-ongoing hype around AI, including predictions of global gloom, Gary’s book is a fantastic read: a lucid, no-nonsense and occasionally provocative take on the current state of AI, that distills complex concepts into simple ideas, and includes plenty of interesting and often funny anecdotes.

The book builds on Gary’s earlier assessment of deep learning (see Deep Learning: A Critical Appraisal), and advocates for a hybrid approach to AI.

Below is the video of his talk at the event, plus a few notes I derived from both the talk and the book. I’ll keep those brief, as the book is worth reading in its entirety.

Mind the gap:

- The current rhetoric about AI is almost messianic

- But current AI is narrow – it works for particular tasks, but it is less promising in real-world situations

- What we have for now are digital idiot savants: software that can, for example, read bank checks, but does little else

- Therefore, AI has a trust problem: it is not broad enough to be trusted to perform in a full range of circumstances. It gets tricked by outliers.

- There is an “AI chasm” between ambition and reality.

- There is an urgent need for lay readers and policy makers to understand the true state of the art

What’s at stake:

- Current AI suffers from all sorts of issues: it is not robust, it can perpetuate social biases, it can be manipulated, it can end up with the wrong goals by applying instructions too literally without understanding the intent, etc.

Deep learning, and beyond:

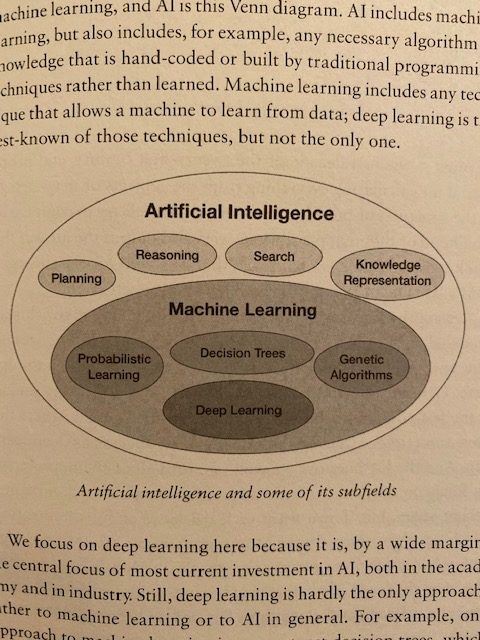

- Deep learning is, by a wide margin, the central focus of most current investment in AI

- The results from deep learning have been truly remarkable. It has achieved state-of-the-art results in benchmark after benchmark, particularly in object recognition and speech recognition. It has also proven to be astonishingly general beyond those.

- Still, deep learning faces three core problems: it is greedy (needs massive amounts of data), opaque (black box) and brittle (has issues with outliers, see example of the flipped-over school bus in the video)

- Bonus: a simple chart from the book (shown above) explains the difference between AI and machine learning

If computers are so smart, how come they can’t read?

- Machines cannot reliably comprehend even basic texts

- There are all sorts of complexities involved in reading, such as requiring a reader (human or otherwise) to follow a chain of inferences that are only implicit in the story

- Search engines are one of AI’s biggest success stories, but they don’t understand what they are reading

- Deep learning doesn’t understand compositionality. One surprisingly hard challenge for it is just understanding the word “not”.

Where’s Rosie?

- When it comes to robots, we want Rosie, the all-purpose robot from The Jetsons.

- There’s been real progress, and a lot of exciting hardware is on the way, but we’re still far from that goal

- Localization has been a hard challenge despite the progress of GPS and techniques such as SLAM

- There are still challenges in three areas: assessing situations, predicting the probable future, and deciding dynamically, as situations change, on the best course of action

Insights from the Human Mind:

- General intelligence will require both mechanisms like deep learning for recognizing images and machinery for handling reasoning and generalization, closer to the mechanisms of classical AI and the world of rules and abstractions

- For example, complex cognitive creatures aren’t blank slates. But within the field of machine learning, many see wiring anything innately as tantamount to cheating.

- The real advance in AI will start with an understanding of what kind of knowledge and representations should be built in prior to learning, in order to bootstrap the rest.

- This will include the very difficult task of encoding knowledge and representing “common sense” in machines, including things like time, space, causality and the ability to reason

Trust:

- Trust in AI has to start with good engineering practices (including designing for failure by incorporating fail-safes), mandated by laws and industry standards

- Program verification is a promising route

- The AI scientists working on deep learning tend to design more by experimentation than theory

- To be trusted, machines need to be imbued by their creators with ethical values