If you follow the various talks at Data Driven NYC, and the data ecosystem in general, it’s plenty apparent that the overall tooling for data, data science and machine learning is still in its infancy, particularly compared to the software stack.

While this may feel ironic (yes, I really do think so) given the billions in venture capital money that have been poured into the space, it’s worth remembering that the data stack (at least in its “big data” phase) is relatively recent (10-15 years), while the software stack has had several decades of evolution.

In many organizations, the data science and machine learning stack looks like a collection of various tools, some open source, some proprietary, glued together with one-off scripts. Teams started experimenting with one tool, then another, then created ad hoc pathways to make it all work together over time, and before you knew it, you ended up with complex environments that are painful to manage.

In response to this situation, various machine learning frameworks have emerged to abstract away the complexity. Several of those frameworks were developed internally at large tech companies to solve their own problems, and then open sourced.

Kedro is one such example. It is developed and maintained by QuantumBlack, an analytics consultancy acquired by McKinsey in 2015. It’s McKinsey’s first open-source product.

Kedro is somewhat hard to categorize. If it had its own category, it might be considered a Machine Learning Engineering Framework. What React did for front-end engineering code is what Kedro does for machine learning code. It allows you to build “design systems” of reusable machine learning code.

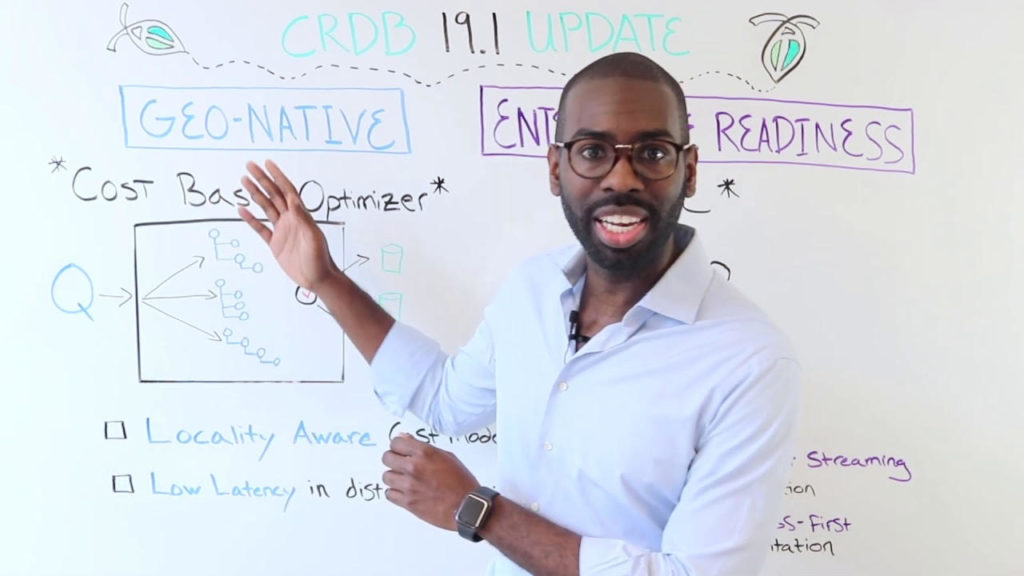

At our most recent Data Driven NYC, we had the great pleasure of hosting Yetunde Dada, a Principal Product Manager at QuantumBlack, who has been the key driving force behind Kedro.

Below is the video and below that, the transcript.
